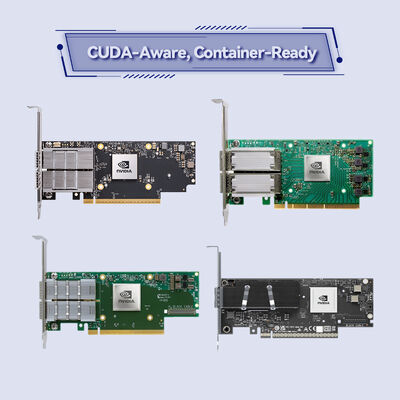

NVIDIA ConnectX-7 MCX755106AS-HEAT: 400G (2 × 200G) Dual-Port Smart Network Adapter

Product Details:

| Brand Name: | Mellanox |
|---|---|
| Model Number: | MCX755106AS-HEAT (900-9X7AH-0078-DTZ) |
| Document: | Connectx-7 infiniband.pdf |

Payment:

| Minimum Order Quantity: | 1 unit |
|---|---|
| Price: | Negotiable |
| Packaging Details: | Outer box |
| Delivery Time: | Based on stock availability |
| Payment Terms: | T/T |
| Supply Ability: | Supplied by project/batch |

Additional Information

| Model Number: | MCX755106AS-HEAT (900-9X7AH-0078-DTZ) | Ports: | 2-port |
|---|---|---|---|
| Technology: | InfiniBand | Interface Type: | QSFP112 |
| Dimensions: | 16.7cm x 6.9cm | Origin: | India / Israel / China |
| Transmission Rate: | 200Gb/s per port | Host Interface: | PCIe 5.0 x16 |
| Highlight: | NVIDIA ConnectX-7 400G network adapter, Mellanox dual-port smart NIC, ConnectX-7 network card with heatsink | | |

Product Description


The NVIDIA ConnectX-7 MCX755106AS-HEAT is an advanced dual-port network interface card designed for next-generation data center infrastructure. It supports both InfiniBand and Ethernet, delivering up to 200Gb/s per port (400Gb/s aggregate across both ports). Engineered for AI clusters, high-performance computing, and hyperscale cloud environments, it provides hardware-accelerated networking, storage, and security functions, along with the ultra-low latency and high bandwidth that demanding data-intensive workloads require.

Product Overview

The NVIDIA ConnectX-7 MCX755106AS-HEAT is a SmartNIC that pairs high-speed networking with broad hardware acceleration to improve server efficiency, reduce CPU overhead, and raise data transfer rates. The dual-port adapter is particularly valuable where network performance directly affects application results, such as distributed AI training and real-time analytics.

Key Characteristics

- Dual-Protocol Flexibility: Seamlessly operates in both InfiniBand and Ethernet network environments.

- Maximum Bandwidth: Supports up to 200Gb/s per port (NDR200 InfiniBand or 200GbE), for 400Gb/s aggregate across both ports.

- Comprehensive Offload Engines: Hardware acceleration for networking protocols, storage access (NVMe-oF, GPUDirect Storage), and security encryption.

- Advanced RDMA Implementation: Full support for RDMA over Converged Ethernet (RoCE) and native InfiniBand RDMA for direct server-to-server communication.

- In-Network Computing: Integrated NVIDIA SHARP™ technology for offloading collective operations in HPC and AI clusters.

- Precision Timing: Delivers nanosecond-accurate synchronization with IEEE 1588 PTP support for time-sensitive applications.
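The benefit of in-network computing can be estimated with a simple traffic model. The sketch below compares the data each rank transmits in a classic ring allreduce with an idealized in-network reduction of the kind SHARP performs inside the InfiniBand switches. The function names are illustrative, and the in-network model is a deliberate simplification that ignores packet headers, tree depth, and message segmentation.

```python
def ring_allreduce_bytes_per_rank(n_ranks: int, message_bytes: float) -> float:
    """Bytes each rank sends in a ring allreduce.

    The reduce-scatter and allgather phases each move (n-1)/n of the buffer per rank.
    """
    if n_ranks < 2:
        return 0.0
    return 2 * (n_ranks - 1) / n_ranks * message_bytes


def in_network_allreduce_bytes_per_rank(n_ranks: int, message_bytes: float) -> float:
    """Idealized switch-side aggregation (simplified model, headers ignored):
    each rank sends its buffer once and the switches return the reduced result."""
    if n_ranks < 2:
        return 0.0
    return float(message_bytes)


if __name__ == "__main__":
    gib = 2**30
    for n in (2, 8, 64, 1024):
        ring = ring_allreduce_bytes_per_rank(n, gib) / gib
        net = in_network_allreduce_bytes_per_rank(n, gib) / gib
        print(f"{n:5d} ranks: ring {ring:.3f} GiB sent per rank, in-network {net:.3f} GiB")
```

As the rank count grows, the ring algorithm approaches 2× the buffer size per rank while the idealized in-network reduction stays at 1×; real savings depend on message size, topology, and SHARP configuration.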

Core Technologies & Standards

- Networking Protocols: Compliant with the InfiniBand Trade Association (IBTA) Specification 1.5, IEEE 802.3 Ethernet (10GbE to 200GbE), RoCE v2, and overlay protocols (VXLAN, GENEVE).

- Host Interface: PCI Express 5.0 x16, backward-compatible with PCIe 4.0 and 3.0 slots at reduced host bandwidth.

- Security Acceleration: Inline hardware encryption/decryption for IPsec, TLS 1.3, and MACsec using AES-GCM 128/256-bit keys.

- Virtualization Support: SR-IOV (Single Root I/O Virtualization) for efficient virtual machine network connectivity.

- Management Standards: Supports NC-SI, Redfish, and PLDM for comprehensive device and firmware management.
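On Linux, SR-IOV virtual functions are normally created through the standard sysfs files `sriov_totalvfs` and `sriov_numvfs` under `/sys/class/net/<ifname>/device`. The helper below is a hypothetical sketch of that step, assuming SR-IOV is already enabled in the adapter firmware (on ConnectX cards, for example, via mlxconfig's `SRIOV_EN` and `NUM_OF_VFS` parameters). The `sysfs_root` parameter and the interface name `ens1f0np0` are illustrative assumptions that let the demo run against a fake directory tree.

```python
import tempfile
from pathlib import Path


def set_num_vfs(ifname: str, num_vfs: int, sysfs_root: Path = Path("/sys")) -> int:
    """Ask the kernel driver to create `num_vfs` SR-IOV virtual functions (hypothetical helper).

    Writing the real sysfs files requires root privileges.
    """
    dev = sysfs_root / "class" / "net" / ifname / "device"
    total = int((dev / "sriov_totalvfs").read_text().strip())
    if not 0 <= num_vfs <= total:
        raise ValueError(f"{ifname}: requested {num_vfs} VFs, device allows 0..{total}")
    numvfs_file = dev / "sriov_numvfs"
    current = int(numvfs_file.read_text().strip())
    if current == num_vfs:
        return num_vfs
    # The kernel rejects changing one non-zero VF count to another directly,
    # so reset to 0 first when VFs already exist.
    if current != 0 and num_vfs != 0:
        numvfs_file.write_text("0")
    numvfs_file.write_text(str(num_vfs))
    return num_vfs


if __name__ == "__main__":
    # Demo against a fake sysfs tree; "ens1f0np0" is a hypothetical interface name.
    with tempfile.TemporaryDirectory() as tmp:
        dev = Path(tmp) / "class" / "net" / "ens1f0np0" / "device"
        dev.mkdir(parents=True)
        (dev / "sriov_totalvfs").write_text("16\n")
        (dev / "sriov_numvfs").write_text("0\n")
        set_num_vfs("ens1f0np0", 8, sysfs_root=Path(tmp))
        print((dev / "sriov_numvfs").read_text())
```

Each resulting VF appears as its own PCIe function that can be passed through to a virtual machine.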

Working Principle

The ConnectX-7 NIC operates as an intelligent I/O device within the server's PCIe architecture. It offloads network, storage, and security processing from the host CPU to dedicated hardware engines, reducing both latency and CPU utilization. Using Remote Direct Memory Access (RDMA), it enables direct, kernel-bypass transfers between application memory on different hosts. Its programmable data path handles packet processing, tunnel encapsulation, and security protocols at line rate in both virtualized and bare-metal deployments. The architecture is designed to minimize data movement through host memory and maximize processing efficiency.
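Processing "at line rate" is a demanding target. As a back-of-envelope sketch (not a vendor figure), the function below computes the maximum packet rate for a given Ethernet frame size, assuming the standard 20 bytes of per-frame wire overhead (7-byte preamble, 1-byte start-of-frame delimiter, 12-byte minimum inter-frame gap). The function name is illustrative.

```python
WIRE_OVERHEAD_BYTES = 20  # preamble (7) + start-of-frame delimiter (1) + minimum inter-frame gap (12)


def max_packet_rate(line_rate_bps: float, frame_bytes: int) -> float:
    """Maximum Ethernet frames per second at a given line rate.

    `frame_bytes` is the frame size including header and FCS; 64 bytes is the legal minimum.
    """
    if frame_bytes < 64:
        raise ValueError("Ethernet frames are at least 64 bytes")
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return line_rate_bps / bits_on_wire


if __name__ == "__main__":
    for gbps in (100, 200, 400):
        pps = max_packet_rate(gbps * 1e9, 64)
        ns_per_pkt = 1e9 / pps
        print(f"{gbps} Gb/s, 64 B frames: {pps / 1e6:.1f} Mpps, {ns_per_pkt:.2f} ns per packet")
```

At 200 Gb/s this works out to roughly 298 million minimum-size packets per second, or about 3.4 ns per packet, which is only about ten clock cycles for a CPU core running at 3 GHz. Budgets that tight are why per-packet work is pushed into the adapter's hardware pipeline.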

Application Scenarios

- AI and Machine Learning Infrastructure: Accelerates distributed model training by providing high-bandwidth, low-latency connectivity between GPU clusters.

- High-Performance Computing: Essential for scientific research, computational fluid dynamics, and genomic analysis requiring massive parallel data exchange.

- Cloud and Hyperscale Data Centers: Enables high-density virtualization, software-defined networking, and efficient storage disaggregation architectures.

- Financial Services and Trading: Provides microsecond latency and precise time synchronization for algorithmic trading and real-time risk analysis.

- Enterprise Storage Acceleration: Optimizes performance for NVMe-over-Fabrics and GPUDirect Storage implementations.
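Precise time synchronization with IEEE 1588 relies on the adapter stamping PTP packets in hardware; the clock offset itself is computed by PTP software (for example, linuxptp's `ptp4l`) from four timestamps. The sketch below shows the standard delay request-response calculation, assuming a symmetric network path; the class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PtpTimestamps:
    """Timestamps (in nanoseconds) from one Sync / Delay_Req exchange."""
    t1: int  # master sends Sync (master clock)
    t2: int  # slave receives Sync (slave clock)
    t3: int  # slave sends Delay_Req (slave clock)
    t4: int  # master receives Delay_Req (master clock)


def offset_and_delay(ts: PtpTimestamps) -> tuple[float, float]:
    """Return (slave clock offset from master, mean one-way path delay) in ns.

    Assumes equal forward and reverse path delays.
    """
    master_to_slave = ts.t2 - ts.t1
    slave_to_master = ts.t4 - ts.t3
    offset = (master_to_slave - slave_to_master) / 2
    delay = (master_to_slave + slave_to_master) / 2
    return offset, delay


if __name__ == "__main__":
    # Hypothetical exchange: true one-way delay 500 ns, slave clock 120 ns ahead of master.
    ts = PtpTimestamps(t1=1_000, t2=1_620, t3=2_000, t4=2_380)
    print(offset_and_delay(ts))  # (120.0, 500.0)
```

Because the calculation assumes equal forward and reverse delays, any path asymmetry appears as an offset error of half the asymmetry. Hardware timestamping removes software-stack jitter from t1 through t4 but cannot remove asymmetry in the network itself.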

Specifications

| Attribute | Specification for MCX755106AS-HEAT |

|---|---|

| Product Model / NIC Card | ConnectX-7 MCX755106AS-HEAT (market reference: mq9700) |

| Network Protocols | InfiniBand, Ethernet |

| Port Configuration & Speeds | Dual-port; InfiniBand: NDR 400Gb/s, HDR 200Gb/s, EDR 100Gb/s Ethernet: 400/200/100/50/25/10 Gigabit Ethernet |

| Host Interface | PCI Express 5.0 x16 |

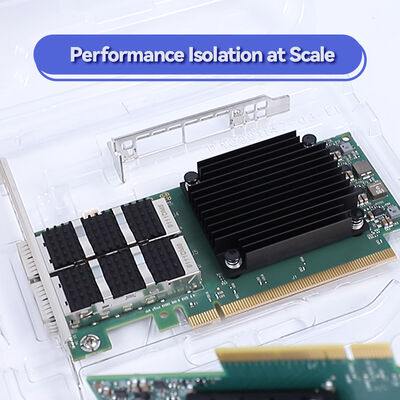

| Form Factor | Standard FHFL (Full Height, Full Length) PCIe Card |

| Key Acceleration Technologies | RDMA, RoCE v2, SR-IOV, NVMe-oF offload, In-Network Computing (SHARP) |

| Security Features | Hardware-accelerated IPsec, TLS 1.3, MACsec inline encryption/decryption |

| Operating System Support | Linux (RHEL, Ubuntu), Windows Server, VMware ESXi |

| Typical Use Case | High-performance computing clusters, AI/ML training platforms, cloud data center networking |

Advantages & Competitive Edge

- Industry-Leading Performance: The PCIe 5.0 x16 interface offers twice the host bandwidth of PCIe 4.0 adapters, removing the host link as a bottleneck when both ports run at full rate.

- Comprehensive Workload Offload: Unlike conventional network cards, the ConnectX-7 offloads key parts of the networking, storage, and security data paths to dedicated hardware.

- Future-Ready Architecture: Support for NDR200 InfiniBand and 200GbE helps extend the useful life of the infrastructure.

- Deep Software Ecosystem Integration: Optimized for NVIDIA's computing ecosystem, including GPUs (via GPUDirect), communication libraries (NCCL, Open MPI), and management frameworks.

- Operational Efficiency: Advanced power management and thermal design reduce total cost of ownership while maintaining peak performance.

Service & Support

We provide end-to-end support for the ConnectX-7 MCX755106AS-HEAT network adapter:

- Warranty Coverage: Includes standard manufacturer warranty with optional extended service plans available.

- Inventory & Delivery: We maintain substantial stock for immediate shipment, backed by reliable supply chain management.

- Technical Assistance: 24/7 customer support and engineering consultation from certified network specialists.

- Integration Support: Professional services available for system integration, driver configuration, and performance optimization.

Frequently Asked Questions

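What are the server requirements for the MCX755106AS-HEAT network card?

A PCIe 5.0 x16 slot is recommended so that both ports can run at full rate. The card also works in a PCIe 4.0 x16 slot, where host bandwidth can become the limit when both ports are busy. Check that the server BIOS supports PCIe bifurcation if your configuration needs it, and make sure the chassis provides adequate cooling for high-performance components.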
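The slot-generation advice can be checked with simple arithmetic. This sketch assumes both ports run at 200 Gb/s and counts only the 128b/130b line encoding used by PCIe 3.0 and later; real throughput is lower once TLP and DLLP protocol overhead is included. The function name is illustrative.

```python
# Per-lane signalling rate in GT/s; PCIe 3.0 and later use 128b/130b line encoding.
PCIE_GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}


def pcie_bandwidth_gbps(gen: int, lanes: int) -> float:
    """Per-direction PCIe bandwidth in Gb/s after 128b/130b encoding.

    Ignores TLP/DLLP protocol overhead, so achievable throughput is lower.
    """
    return PCIE_GT_PER_LANE[gen] * lanes * 128 / 130


if __name__ == "__main__":
    ports, port_gbps = 2, 200  # assumed: both ports at 200 Gb/s
    demand = ports * port_gbps
    for gen in (3, 4, 5):
        bw = pcie_bandwidth_gbps(gen, 16)
        verdict = "enough" if bw >= demand else "bottleneck"
        print(f"PCIe {gen}.0 x16: {bw:.0f} Gb/s per direction vs {demand} Gb/s of ports -> {verdict}")
```

On these assumptions, PCIe 5.0 x16 (about 504 Gb/s per direction) covers 2 × 200 Gb/s, PCIe 4.0 x16 (about 252 Gb/s) is enough for one port at full rate but not both, and PCIe 3.0 x16 (about 126 Gb/s) falls short of even a single 200 Gb/s port.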

Can this NIC card operate in a mixed InfiniBand and Ethernet environment?

Each port can be configured independently for InfiniBand or Ethernet through a persistent firmware setting (the LINK_TYPE_P1 and LINK_TYPE_P2 parameters of NVIDIA's mlxconfig tool), allowing flexible deployment in heterogeneous network environments.
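A minimal sketch of that configuration step: the hypothetical helper below only builds (and does not run) the mlxconfig command line from NVIDIA Firmware Tools (MFT), assuming the usual VPI encoding of 1 = InfiniBand and 2 = Ethernet for `LINK_TYPE_P<n>`. The MST device path in the demo is an illustrative assumption; real device names are listed by `mst status` after `mst start`.

```python
import shlex

# mlxconfig LINK_TYPE_P<n> values on VPI adapters: 1 = InfiniBand, 2 = Ethernet.
LINK_TYPE = {"ib": 1, "eth": 2}


def mlxconfig_link_type_cmd(device: str, port_modes: dict[int, str]) -> list[str]:
    """Build (but do not run) an mlxconfig command that sets each port's link type."""
    settings = []
    for port, mode in sorted(port_modes.items()):
        if port not in (1, 2):
            raise ValueError(f"dual-port adapter has ports 1 and 2, not {port}")
        try:
            settings.append(f"LINK_TYPE_P{port}={LINK_TYPE[mode.lower()]}")
        except KeyError:
            raise ValueError(f"unknown mode {mode!r}; use 'ib' or 'eth'") from None
    # -y answers mlxconfig's confirmation prompt non-interactively.
    return ["mlxconfig", "-d", device, "-y", "set", *settings]


if __name__ == "__main__":
    # Hypothetical MST device path.
    cmd = mlxconfig_link_type_cmd("/dev/mst/mt4129_pciconf0", {1: "ib", 2: "eth"})
    print(shlex.join(cmd))
```

The new link types take effect only after a reboot or a firmware reset (for example with mlxfwreset).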

Does the adapter support containerized environments like Kubernetes?

Yes, with appropriate drivers and Kubernetes CNI plugins, the ConnectX-7 can provide high-performance networking for container workloads, especially when using RDMA capabilities.

What are the primary use cases for the MCX755106AS-HEAT adapter versus standard 100GbE cards?

The MCX755106AS-HEAT is designed for extreme performance scenarios: AI/ML distributed training, HPC simulations, financial trading platforms, and hyperscale cloud backbones where latency and throughput are critical.

Important Precautions

- System Compatibility: Verify server platform compatibility, particularly PCIe generation support and slot configuration requirements.

- Thermal Considerations: Ensure proper server chassis airflow design as high-performance network cards generate substantial thermal output.

- Software Requirements: Always install the latest stable drivers and firmware from NVIDIA's official support portal for optimal performance and security updates.

- Cable and Transceiver Compatibility: Use qualified optical modules or DAC/AEC cables rated for the target data rate (NDR200/200GbE or lower compatible speeds).

- Security Configuration: When deploying hardware encryption features, implement proper key management policies and network security configurations.

Company Introduction

With more than ten years of specialized experience in network hardware distribution, we have built a reputation as a reliable provider of cutting-edge networking solutions. Our extensive manufacturing partnerships and technical expertise enable us to serve a global customer base with high-performance products like the ConnectX-7 NIC card.

As an authorized partner for leading technology brands including NVIDIA (Mellanox), Aruba, Extreme Networks, and Ruckus, we maintain substantial inventory of products such as the MCX755106AS-HEAT network card. This allows us to fulfill orders ranging from individual units to large-scale enterprise deployments with competitive pricing and guaranteed quality.
